Soojin Park

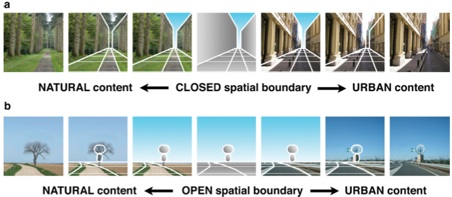

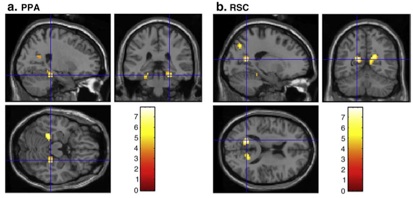

Human scene categorization is remarkably rapid and accurate, and many theories and computational models have proposed what constitutes a scene: is it the objects a scene contains (e.g., whether the scene contains trees or buildings), or is it the spatial structure of the scene (e.g., whether the space is very open or closed)? Using fMRI multi-voxel pattern analysis, we examined whether the neural representation of scenes reflects global properties of scene structure, such as the openness of a space, or properties of surfaces and contents within a space, such as naturalness. Interestingly, in the parahippocampal place area (PPA), there was high confusion across scenes that had similar spatial structure; in contrast, the LOC (lateral occipital complex, a region sensitive to objects) showed high confusion across scenes that contained similar objects. These results suggest that multiple visual brain regions are involved in analyzing different properties of a scene.

Among the many properties that make up a scene, I am interested in its functional properties, for example, whether a scene is highly navigable or non-navigable. Functional properties are important for daily human interactions with the world, and can influence people’s actions and navigation. I’m interested in how different brain areas represent these functional properties of a scene, compared to visuo-spatial properties.

So far, I have described my work exploring how the mind overcomes the ambiguity of perception through the constructive nature of scene representations. In addition to examining how the visual system overcomes limits in sensory input, I am also interested in how the mind overcomes limits in cognitive resources such as attention or memory to optimize human performance. We can reveal the architecture of how information is processed in the brain by studying which tasks interfere with each other, or which task contexts influence the encoding of stimuli. I have used visual working memory and attention tasks, as well as behavioral priming, to measure optimized human performance. For more details, please visit my Publication page: Park et al., 2007, JEP:HPP

The human visual system can sample only a single snapshot of a scene at a time, and can keep track of only a handful of objects in the environment at any given moment. Yet people can effortlessly navigate through an unfamiliar city, comprehend fast movie trailers, and find a friend in a crowd. To overcome limits in visual processing, the human visual system has developed mechanisms to perceive a coherent visual world beyond the fragmented input it receives, as well as a proficiency in allocating its limited memory and attentional resources to represent a scene. My research program in cognitive science aims to understand how the human mind constructs such a visual reality beyond the constraints of sensory inputs.

Visual perception and memory are constructive processes. For example, when we scan the world, we are constantly updating scene representations, as well as making predictions about what we might see outside the current view. To do this, we constantly move our eyes and head to scan the environment, which results in multiple fragmented visual inputs. It is fascinating how our visual system creates a continuous and meaningful world from such multiple discrete views of our environment. Using fMRI, I am interested in studying how scene-selective regions of the brain construct a representation that integrates multiple views and that is extrapolated from the current view.

How about extrapolating beyond what is presented in front of you? Humans have a very limited visual field. However, we can easily extrapolate from what’s in our current visual field and perceive a continuous environment beyond what we have in front of our eyes. To allow perception of a continuous world, the human brain makes highly constrained predictions about the environment just beyond the edges of a view. With fMRI, I tested for neural evidence of the extrapolation of scene layout information beyond what was physically presented, an illusion known as boundary extension.

So far, my research has focused on functions of two scene-sensitive regions of the brain, the parahippocampal place area (PPA) and retrosplenial cortex (RSC). I am interested in expanding the network of scene regions and looking at the dynamics and interactions across these brain areas.

When different snapshot views from a panoramic scene are presented in order (as in the example below), people have no trouble seeing these three panoramic shots as coming from the same scene. Does the brain represent these three views as an integrated scene too? Using fMRI adaptation, we tested whether the PPA and RSC treated these panoramic views as the same or different.

Results demonstrate that the PPA and RSC play different roles in scene perception: the PPA focuses on selective discrimination of different views, while the RSC focuses on the integration of scenes under the same visual context. For more details, please visit my Publication page: Park & Chun, NeuroImage, 2009

Properties of a scene

Constructive nature of scene perception

Results from Park et al., 2007 demonstrate that scene layout representations are extrapolated beyond the confines of the perceptual input. Among many brain regions, the RSC showed the strongest evidence for boundary extension. Such extrapolation may facilitate perception of a continuous world from discontinuous views. For more details, please visit my Publication page: Park et al., Neuron, 2007

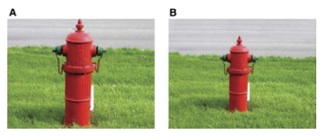

After viewing a close-up view of a scene (A), observers tend to report an extended representation (B). For example, when they are later shown the same scene (A), they say that it looks more close-up than what they originally saw. In contrast, when they are presented with an extended scene (B), they report that it looks identical to the original (A). This phenomenon is called boundary extension (Intraub and Richardson, 1989).

Overcoming the limits in cognitive resources