How is the spatial boundary of scenes represented?

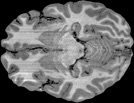

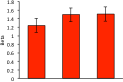

The ability to navigate in the world is intrinsically tied to the ability to visually analyze the spatial features of a scene. For example, analyzing the size of a space or the height of a boundary can influence how you locate an object or orient yourself in that space. Using behavioral, fMRI, and developmental experiments, we find that the high-level visual cortex underlying scene processing is remarkably sensitive to different types of spatial boundary features. We also find that this sensitivity to visual boundary features is impaired in people who are developmentally impaired in real-world spatial navigation.

Neural representation of scene boundaries

Ferrara & Park (2016). Neuropsychologia

Impaired behavioral and neural sensitivity to boundary cues in Williams syndrome

Park, Ferrara, & Landau (2015). Vision Sciences Society Poster

Parametric coding of the size and clutter of natural scenes in the human brain

Park, Konkle, & Oliva (2015). Cerebral Cortex

Diagram labels: Navigation, Visual Scene Boundaries, Neural Patterns

How does the visual system represent the functionality of a scene?

The human visual system needs to make sophisticated decisions about the functional affordance of a scene: for example, whether the scene is easily navigable, whether there is a major obstacle in the way, and in which direction to continue walking. In this research, we ask whether the visual system represents such functional scene properties, which features or combinations of features determine this functionality, and whether the functional representation can be distinguished from other visuo-spatial scene representations.

Neural representation of the navigability in a scene

Park (2013). Vision Sciences Society Talk Abstract

Neural similarity measured by Repetition Suppression and MVPA in fMRI

To test similarities in the neural representation of scenes and objects, our lab uses both fMRI repetition suppression (RS) and multi-voxel pattern analysis (MVPA). Despite the fundamental similarity between the two methods, a growing body of evidence shows that RS and MVPA can yield discrepant results. Recent work from our lab provides a novel working hypothesis that can account for why RS and MVPA results might diverge, and for what conclusions we can draw about a neural representation when the two methods conflict.
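As a rough illustration of how the two measures differ, the sketch below computes a toy version of each on simulated data. It is not the lab's analysis pipeline: the function names, the simulated voxel patterns, and the trial amplitudes are all assumptions made for this example. The MVPA measure compares within-condition and between-condition pattern correlations across two halves of the data, while the RS measure compares the mean regional response to novel and repeated stimulus pairs.

```python
import numpy as np


def pattern_similarity(pattern_a, pattern_b):
    """Pearson correlation between two voxel patterns (1-D arrays)."""
    return float(np.corrcoef(pattern_a, pattern_b)[0, 1])


def mvpa_discrimination(a_half1, a_half2, b_half1, b_half2):
    """Split-half index: mean within-condition correlation minus
    mean between-condition correlation. Positive values suggest the
    region's voxel patterns distinguish conditions A and B."""
    within = (pattern_similarity(a_half1, a_half2)
              + pattern_similarity(b_half1, b_half2)) / 2
    between = (pattern_similarity(a_half1, b_half2)
               + pattern_similarity(b_half1, a_half2)) / 2
    return within - between


def repetition_suppression(novel_responses, repeated_responses):
    """Mean regional response to novel pairs minus repeated pairs.
    Positive values indicate a reduced response to repetition."""
    return float(np.mean(novel_responses) - np.mean(repeated_responses))


# Simulated data (hypothetical): two conditions with distinct
# underlying patterns, each measured twice with independent noise.
rng = np.random.default_rng(0)
n_voxels = 200
prototype_a = rng.normal(size=n_voxels)
prototype_b = rng.normal(size=n_voxels)


def noisy_copy(prototype):
    """One noisy measurement of an underlying voxel pattern."""
    return prototype + rng.normal(scale=0.5, size=prototype.shape)


a1, a2 = noisy_copy(prototype_a), noisy_copy(prototype_a)
b1, b2 = noisy_copy(prototype_b), noisy_copy(prototype_b)
disc = mvpa_discrimination(a1, a2, b1, b2)

# Hypothetical trial-wise response amplitudes for one region.
rs = repetition_suppression(np.array([1.0, 1.2, 0.9]),
                            np.array([0.7, 0.8, 0.6]))

print(f"MVPA discrimination index: {disc:.2f}")
print(f"Repetition suppression: {rs:.2f}")
```

The two indices are computed from different aspects of the signal (the spatial pattern across voxels versus the overall response amplitude), which is one reason they need not agree for the same region.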

Neural representation of object orientation: A dissociation between MVPA and Repetition Suppression

Hatfield, McCloskey, & Park (2016). NeuroImage

Conjoint representation of texture ensemble and location in the Parahippocampal Place Area

Park & Park (2014). Society for Neuroscience Poster

Diagram labels: Multi-Voxel Pattern, Repetition Suppression, Neural Similarity Measures

What are the neural mechanisms that support the construction of coherent scene perception?

We constantly move our eyes to overcome the limits of our visual acuity outside the fovea. How do we perceive the rich details of each view while maintaining a coherent percept across eye movements? Based on a series of fMRI experiments, we have proposed that this is accomplished by taking advantage of the functional architecture of scene coding across distinct scene processing areas, in particular the parahippocampal place area and the retrosplenial complex, which play complementary roles in view specificity and view integration.

Using paradigms that leverage memory representations of scenes, we have also found that the brain represents scene information in memory that extends beyond the physical input. This research suggests that the visual system may use memory as an adaptive mechanism to perceive a broader world beyond the sensory input, or to form a coherent link between the current view of a scene and a recent view that is no longer visible.

Different roles of the parahippocampal place area (PPA) and retrosplenial cortex (RSC) in scene perception

Park & Chun (2009). NeuroImage

The constructive nature of scene perception

Park & Chun (2014). Scene Vision, MIT Press.

Eye movements help link different views in scene-selective cortex

Golomb, Albrecht, Park, & Chun (2011). Cerebral Cortex

Beyond the edges of a view: Boundary extension in human scene-selective visual cortex

Park, Intraub, Yi, Widders, & Chun (2007). Neuron

Refreshing and integrating visual scenes in scene-selective cortex

Park, Chun, & Johnson (2010). Journal of Cognitive Neuroscience

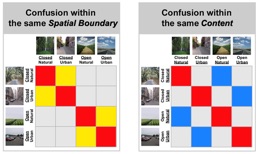

How does the visual system represent multiple scene properties?

What constitutes a scene? Is it the objects in a scene (e.g., whether the scene contains trees or buildings), or is it the spatial structure of the scene (e.g., whether the space is very open or closed)? Using fMRI multi-voxel pattern classification methods, we disentangle the neural representations of different properties of a scene. We find that scenes that share similar spatial structure are represented more similarly in the parahippocampal place area, a region that represents scene information, while scenes that share content are represented more similarly in the lateral occipital complex, a region that represents object information. These findings give insight into how multiple visual brain regions are involved in analyzing different global properties of a scene.
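To make the idea of multi-voxel pattern classification concrete, here is a minimal sketch using simulated data. It assumes a correlation-based nearest-centroid classifier with leave-one-run-out cross-validation, which is one common choice and not necessarily the classifier used in the study. The function name, the "open" and "closed" labels, and the simulated voxel patterns are all hypothetical.

```python
import numpy as np


def leave_one_run_out_accuracy(patterns, labels, runs):
    """Correlation-based nearest-centroid classification.

    patterns: (n_samples, n_voxels) array of voxel patterns
    labels:   (n_samples,) array of condition labels
    runs:     (n_samples,) array of scanner run indices
    Returns the fraction of held-out patterns classified correctly.
    """
    correct = 0
    for held_out in np.unique(runs):
        train = runs != held_out
        test = ~train
        classes = np.unique(labels[train])
        # Average the training patterns of each class into a centroid.
        centroids = np.stack([
            patterns[train & (labels == c)].mean(axis=0) for c in classes
        ])
        for pattern, true_label in zip(patterns[test], labels[test]):
            # Assign the class whose centroid correlates best.
            corrs = [np.corrcoef(pattern, centroid)[0, 1]
                     for centroid in centroids]
            predicted = classes[int(np.argmax(corrs))]
            correct += int(predicted == true_label)
    return correct / len(labels)


# Simulated data (hypothetical): one pattern per condition per run.
rng = np.random.default_rng(1)
n_runs, n_voxels = 6, 100
prototypes = {
    "open": rng.normal(size=n_voxels),
    "closed": rng.normal(size=n_voxels),
}

pattern_list, label_list, run_list = [], [], []
for run in range(n_runs):
    for name, prototype in prototypes.items():
        pattern_list.append(prototype + rng.normal(size=n_voxels))
        label_list.append(name)
        run_list.append(run)

patterns = np.array(pattern_list)
labels = np.array(label_list)
runs = np.array(run_list)

accuracy = leave_one_run_out_accuracy(patterns, labels, runs)
print(f"Cross-validated accuracy: {accuracy:.2f}")
```

In an analysis of this kind, the same stimuli can be relabeled by spatial structure or by content, and the classifier's errors within each brain region indicate which property that region's patterns track.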

Park, Brady, Greene, & Oliva (2011). Journal of Neuroscience

Representing, Perceiving and Remembering the Shape of Visual Space

Oliva, Park, & Konkle (2011). Vision in 3D Environments, Cambridge University Press

CURRENT PROJECTS:

KEY FINDINGS:

Contact Us

Park Lab

Department of Cognitive Science

Johns Hopkins University

119 Krieger Hall

3400 N. Charles Street

Baltimore, MD 21218

Funding Sources

National Eye Institute, National Institutes of Health

Johns Hopkins University Catalyst Award